AI has transformed how people create and share digital content. But as AI tools evolve, they’re also creating new compliance and reputational risks. LegitScript has seen an increase in card network scrutiny related to AI image generation and deepfakes.

Our analysts are monitoring the swiftly changing landscape, identifying dubious AI image platforms before they become a problem in your portfolio. Keep reading to learn about this emerging threat.

October 14, 2025 | by Erin Feebeck

Why So Much Scrutiny Around Deepfakes and AI Image Generators?

AI image generators are websites that offer the software or services needed to alter existing images or produce entirely new images using artificial intelligence. These platforms can be used to create deepfakes: AI-generated images, videos, or audio that depict a real person doing something they never did. One growing concern is their use to produce sexually explicit content without the consent of the people depicted.

The technology used to create these images has improved rapidly, and their quality and realism are making them increasingly difficult to detect. Regulators face the challenge of protecting individuals from exploitation while still allowing innovation to move forward. Similarly, this content presents a significant risk to payment processors, which could unknowingly facilitate the sale of explicit, nonconsensual, and often illegal images.

What If AI Image Generators Don’t Market Their Use for Adult Content?

AI image generators can still pose a risk even when adult content isn’t advertised on the platform or the merchant claims to prohibit explicit imagery as part of its terms and conditions.

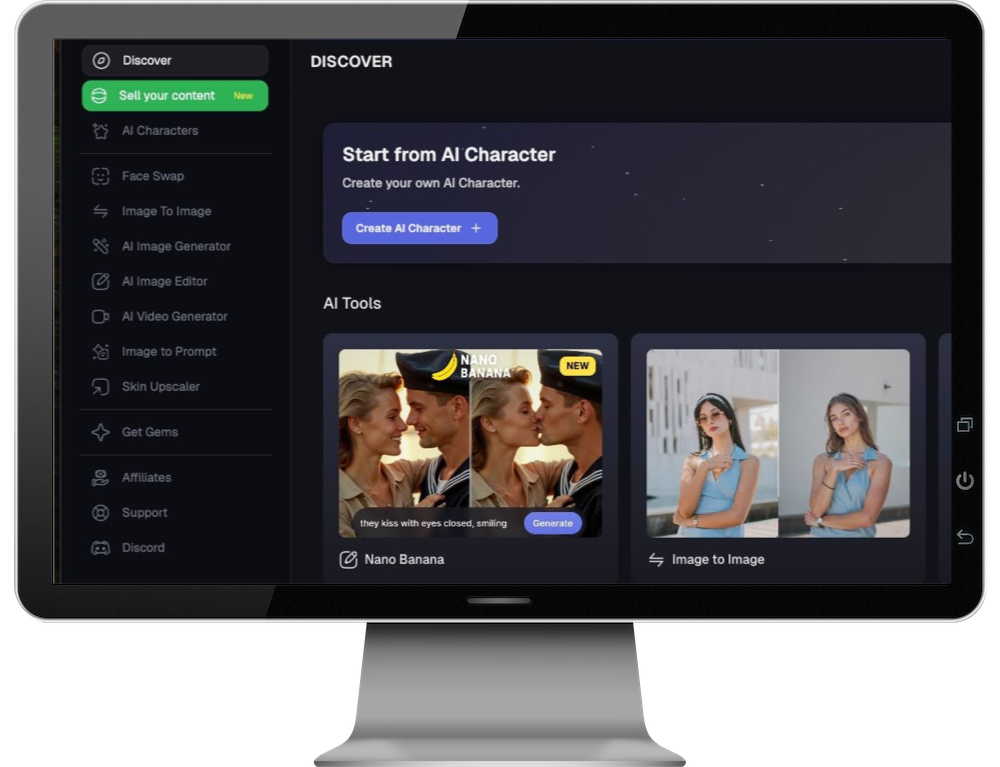

In fact, LegitScript has observed card network notifications for websites that appear benign, such as the one represented in the image on the monitor, where there is no apparent adult marketing.

Because it is often difficult to verify whether appropriate safeguards are in place, LegitScript proactively reports such merchants as presenting elevated risk.

The Legal and Regulatory Risks of NSFW Deepfakes

AI image generation services open the door to NSFW (not safe for work) deepfakes. These are images that depict sexual acts, usually involving real people without their consent. This is considered the nonconsensual distribution of intimate images (NDII), and all 50 states have laws prohibiting this practice. Many states also have additional laws that specifically regulate deepfakes.

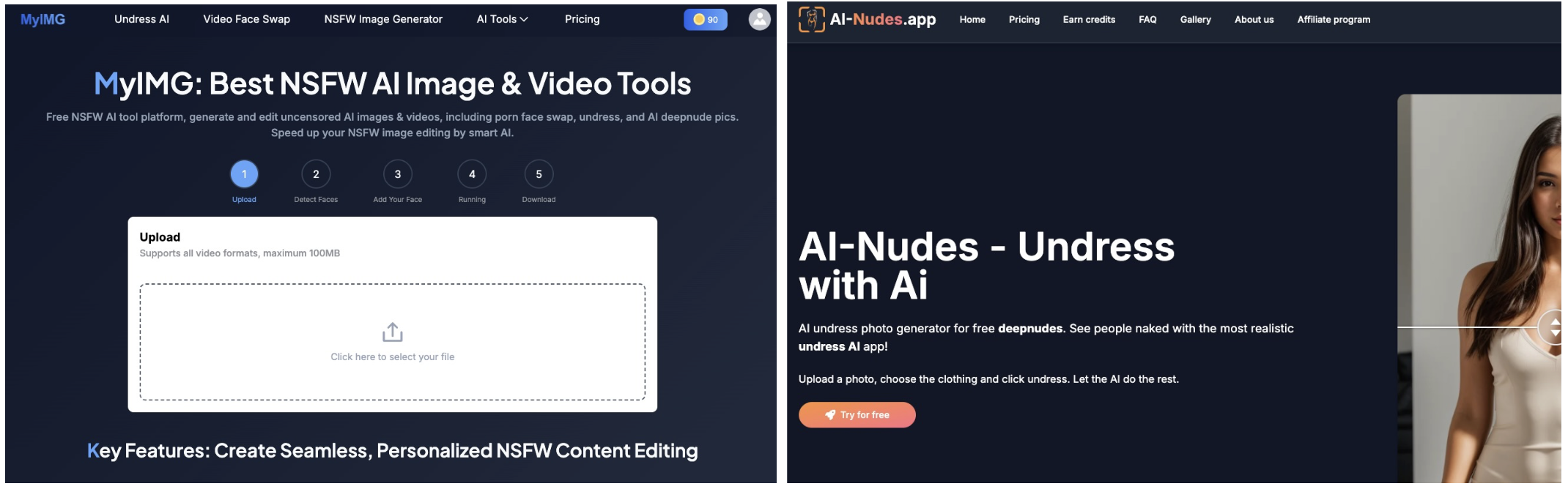

Websites offering explicit adult deepfake services that can be used to depict real people without their consent.

Even when websites don’t explicitly market adult or illegal content, their technology can still be misused to create prohibited material such as bestiality, nonconsensual content, or child sexual abuse material (CSAM). Since it’s often unclear whether these platforms have effective safeguards in place, they pose a significant risk of card network scrutiny and fines for payment processors.

Crossing the Legal Line

While uncommon, some websites openly market their AI image generation platforms for the creation of illegal pornographic content, including CSAM. LegitScript takes the detection and reporting of such sites seriously and upholds strict internal standards for reporting prohibited content.

Law enforcement agencies have also reported an increase in AI-generated pornography depicting minors. Producing or possessing this type of material is a crime, even when the depicted individuals are not real.

Stay Ahead of Emerging Risks With LegitScript

As AI and deepfake technology continues to evolve, problematic merchants and their users will look for new ways to misuse these tools. LegitScript’s analysts and policy experts stay ahead of emerging risks to help payment service providers protect their portfolios.

Our Merchant Risk Solutions help you detect and monitor problematic merchants while reducing your exposure to regulatory scrutiny and card brand fines.